Stable Diffusion Torch Is Not Able To Use GPU – Fixed In 2023

In the rapidly changing world of technology, Stable Diffusion has become one of the best-known tools for generating images. It is a text-to-image model that runs on PyTorch (Torch) and depends on the GPU to work at a usable speed. But here’s the surprise: many users find that Torch is not able to use the GPU at all.

Torch incompatibility with the GPU, Python apps not getting GPU support, algorithmic complexity, memory demands, and data transfer overheads might be the reasons behind the “Stable Diffusion Torch is not able to use GPU” problem.

Let’s look at why so many people run into this problem and how to fix it.

Understanding Stable Diffusion Torch:

Stable Diffusion Torch refers to Stable Diffusion running on the PyTorch framework. It is used for generative modeling, primarily image synthesis, style transfer, and other generative tasks.

It leverages diffusion models, a class of generative models that excel at generating high-quality images. Although it produces impressive visuals, Stable Diffusion Torch can run into problems when it tries to use the GPU.

Reasons Behind Stable Diffusion Torch Is Not Able To Use GPU:

1. Torch Incompatibility With GPU:

Torch is renowned for its flexibility and user-friendly nature, making it a widely favored tool. However, it does not always work smoothly with GPUs, which are essential for tasks like image generation. This issue between Torch and GPUs needs a closer look.

One reason for this problem is that the CPU and the GPU have separate memory. By default, Torch creates tensors in CPU memory, and models and data have to be moved to GPU memory explicitly. That only works when the installed Torch build, the GPU driver, and the GPU itself are compatible. When any part of this chain does not match, Torch cannot use the GPU, and training or running models on the CPU is far slower.

2. Apps Not Getting Support From GPU (Python Users):

If you use Python and Torch for deep learning, you’re familiar with its strength. But here’s the catch: Torch doesn’t always work well with certain GPUs, and that can be frustrating. This means training models with GPUs might not be as fast or efficient.

One reason for this problem is that some older GPUs don’t meet the requirements for Torch. Also, Torch relies on CUDA, NVIDIA’s platform for running computations on its GPUs, and some GPUs don’t support certain CUDA versions. So, you might not get the most out of your hardware when using Torch.

3. Algorithmic Complexity:

Torch uses neural networks that do lots of math, like multiplying matrices and doing convolutions. These need the power of modern GPUs to work fast.

But not all GPUs are the same, and some can’t handle these tasks well because of differences in how they use memory or the hardware they have.

This mismatch between what Torch needs and what some GPUs can do can cause errors or make models run slower on some systems.

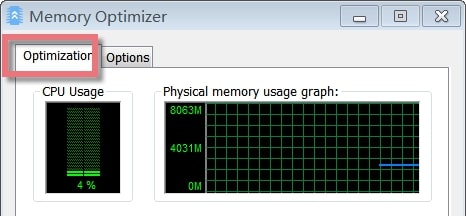

4. Memory Demands:

GPUs usually have less dedicated memory (VRAM) than the system has RAM, and that can be a problem for Torch models that need lots of memory. As deep learning models get bigger, they need even more memory, causing problems with certain GPUs.

This memory issue makes it hard to train big models or use large datasets with GPUs. It can slow down training or even make Torch programs crash.
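As a rough illustration of why model size matters, the arithmetic below estimates the memory needed just to hold a model’s weights. The parameter count is a hypothetical round number chosen for the example, not a measured figure for any particular model, and the function name is made up for this sketch.

```python
# Rough estimate of the memory needed to hold model weights only.
# The parameter count used below is an assumed round number for illustration.

BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weights_gib(num_params: int, dtype: str) -> float:
    """Return the size of the weights alone, in GiB, for the given dtype."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


num_params = 1_000_000_000  # assumption: a model with one billion parameters
for dtype in ("float32", "float16"):
    print(f"{dtype}: {weights_gib(num_params, dtype):.2f} GiB")
```

Activations and temporary buffers come on top of this, so the result is best read as a lower bound on the GPU memory a model needs.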

5. Data Transfer Overheads:

A big problem for users running Torch on a GPU is the cost of moving data between the CPU and the GPU. The two have separate memory, so every transfer takes time, and when a lot of data moves back and forth the GPU can sit idle waiting for it. Heavy transfers also make both parts work harder, which can use more energy and raise temperatures, and that affects the whole Torch system.
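One common way to limit this overhead is to move data to the device once, do all the work there, and copy back only the final result. The sketch below shows that pattern; the function name and tensor size are made up for illustration, and it falls back to the CPU (or returns None if PyTorch is missing) so it can be run on any machine.

```python
def scale_on_device(steps: int = 3):
    """Move data to the device once, compute there, copy one scalar back."""
    try:
        import torch
    except ImportError:
        return None  # PyTorch is not installed; nothing to demonstrate

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    x = torch.ones(1024, 1024).to(device)  # a single host-to-device copy

    for _ in range(steps):
        x = x * 2  # stays on `device`; no transfer inside the loop

    # .item() copies one scalar back to the host at the very end
    return (x ** 2).mean().item()


print(scale_on_device())
```

Calling `.cpu()` or `.item()` inside the loop instead would force a transfer on every step, which is the kind of overhead described above.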

How To Solve The “Stable Diffusion Torch Is Not Able To Use GPU” Error?

1. Check GPU Availability:

Make sure your computer has a GPU that’s compatible with deep learning frameworks. Not all GPUs work with these frameworks, so check if your GPU supports CUDA.
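A quick way to see what PyTorch itself can detect is to ask it directly. The helper below is a minimal sketch (the function name is invented for this example); it returns None when PyTorch is not installed, so it is safe to run at any stage of the setup.

```python
def cuda_summary():
    """Describe the CUDA devices PyTorch can see, or None without PyTorch."""
    try:
        import torch
    except ImportError:
        return None

    if not torch.cuda.is_available():
        return "CUDA not available to PyTorch"

    names = [
        torch.cuda.get_device_name(i)
        for i in range(torch.cuda.device_count())
    ]
    return "CUDA devices: " + ", ".join(names)


print(cuda_summary())
```

If this reports that CUDA is not available even though the machine has an NVIDIA GPU, the cause is usually the driver or the installed PyTorch build, which the next steps cover.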

2. Install GPU Drivers:

Make sure your computer has the right drivers for your GPU. You can get the latest drivers for your GPU from the website of the company that made it, like NVIDIA or AMD.

3. Install CUDA Toolkit:

Ensure your system meets the minimum requirements for the CUDA Toolkit:

- Compatible NVIDIA GPU

- Supported operating system

Then install it:

- Verify your system’s compatibility.

- Go to NVIDIA’s official website.

- Download the latest version of the CUDA Toolkit.

4. Install cuDNN:

Follow these steps carefully:

- Download the appropriate version of cuDNN from the NVIDIA website.

- Extract the downloaded cuDNN file to a folder on your computer.

- Navigate to the extracted folder.

- Copy the content files from the cuDNN folder.

- Paste the copied files into the matching directories of your CUDA Toolkit installation (for example, its bin, include, and lib folders).

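5. Check PyTorch Or TensorFlow Installation:

Make sure you’ve got PyTorch or TensorFlow with GPU support installed. You can usually do this by using pip to install the version that works with your GPU. For PyTorch, you can install a specific version with this command (pick the CUDA-enabled build for your platform from the install selector on the official PyTorch site, because a CPU-only build will never use the GPU):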

pip install torch==your_preferred_version

If you encounter problems with PyTorch or TensorFlow, remove conflicting software and reinstall only what’s necessary for deep learning.
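To confirm which kind of build is actually installed, you can inspect PyTorch’s own version information. This is a minimal sketch with an invented helper name; it returns None if PyTorch cannot be imported.

```python
def torch_build_info():
    """Report the installed PyTorch version and the CUDA/cuDNN it was built with."""
    try:
        import torch
    except ImportError:
        return None

    cudnn = None
    if torch.backends.cudnn.is_available():
        cudnn = torch.backends.cudnn.version()

    return {
        "torch": torch.__version__,
        # None here means this build was made without CUDA support
        "cuda_build": torch.version.cuda,
        "cudnn": cudnn,
    }


print(torch_build_info())
```

A `cuda_build` of None on a machine with an NVIDIA GPU suggests that a build without CUDA support was installed, in which case reinstalling the CUDA-enabled build is the likely fix.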

6. Update Your Deep Learning Framework:

Start by checking whether a new version of your Stable Diffusion setup and of Torch is available. If so, make sure it supports your current GPU driver, or update the driver to match. Often, updating both the framework and the driver together eliminates the problem entirely.

Remember that different frameworks may have specific requirements for GPU drivers, so it’s essential to check their compatibility before proceeding further.

7. Restart Your System:

Sometimes, the simplest fix for GPU-related problems is restarting your computer, particularly after drivers or system configurations have been updated.

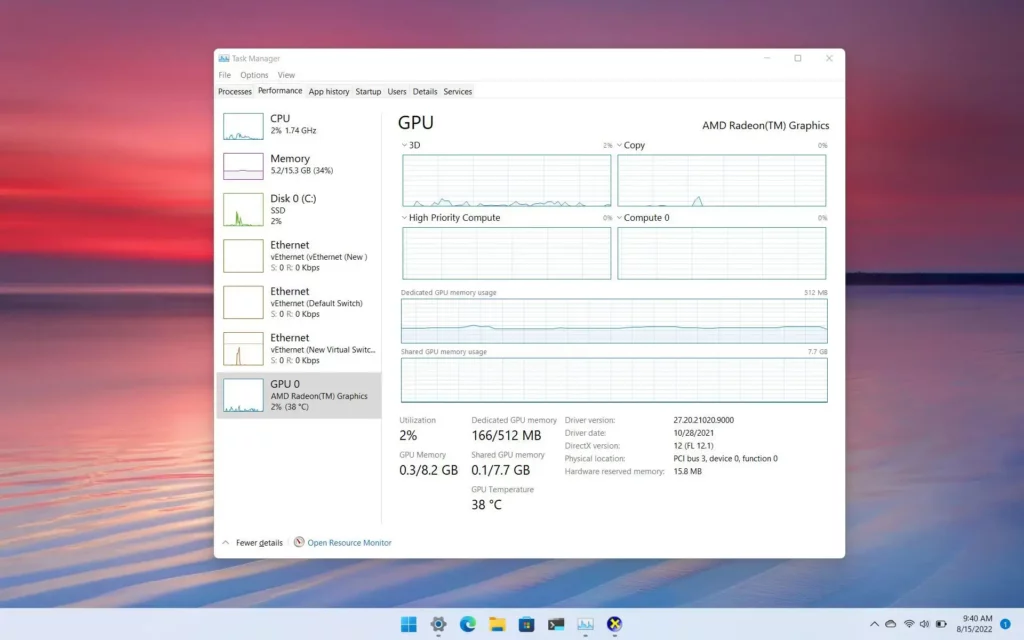

8. Check GPU Usage:

Make sure your GPU isn’t being used by any other programs or processes, as this could prevent your deep learning framework from accessing it.

9. Verify GPU Visibility:

Run GPU-related commands, such as nvidia-smi (NVIDIA GPUs) or rocm-smi (AMD GPUs), to make sure your GPU is visible and reachable. This will display details about your GPU, including the driver version and GPU usage.
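For NVIDIA GPUs, the same checks can be scripted. The sketch below wraps nvidia-smi’s CSV query mode in a small helper (the helper name is invented for this example); it returns None when nvidia-smi is not on the PATH or the call fails. The second query lists processes currently using the GPU, which also covers the usage check from step 8.

```python
import shutil
import subprocess


def nvidia_smi_query(query_flag: str, fields: str):
    """Run an nvidia-smi CSV query; return output lines, or None on failure."""
    if shutil.which("nvidia-smi") is None:
        return None  # no NVIDIA driver tools found on this machine
    try:
        result = subprocess.run(
            ["nvidia-smi", f"{query_flag}={fields}", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=False,
            timeout=30,
        )
    except (subprocess.TimeoutExpired, OSError):
        return None
    if result.returncode != 0:
        return None
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


# GPUs visible to the driver, with driver version and total memory
print(nvidia_smi_query("--query-gpu", "name,driver_version,memory.total"))
# Processes currently running compute work on the GPU
print(nvidia_smi_query("--query-compute-apps", "pid,process_name,used_memory"))
```

An empty list from the first query, or None, points to a driver problem rather than a PyTorch problem.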

10. Check System Environment:

Ensure that the CUDA_HOME and LD_LIBRARY_PATH environment variables on your system are correctly set to the CUDA and cuDNN installs.
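A small script can show what these variables currently contain and whether the paths they name exist. This is a sketch for Linux-style setups; the function name is made up, and it only checks that directories exist, not that they hold a working CUDA or cuDNN install.

```python
import os


def cuda_env_report(environ=os.environ):
    """Return CUDA_HOME and LD_LIBRARY_PATH entries with an 'exists' flag each."""
    cuda_home = environ.get("CUDA_HOME")
    entries = [
        path
        for path in environ.get("LD_LIBRARY_PATH", "").split(os.pathsep)
        if path
    ]
    return {
        # (value, True if it is set and points at an existing directory)
        "CUDA_HOME": (cuda_home, bool(cuda_home) and os.path.isdir(cuda_home)),
        "LD_LIBRARY_PATH": [(path, os.path.isdir(path)) for path in entries],
    }


print(cuda_env_report())
```

Any entry flagged False is either unset or points to a directory that does not exist, which is worth fixing before looking further.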

11. Use GPU Device In Your Code:

Indicate specifically in your Python code that you want to use the GPU device. With PyTorch imported as torch, you can write:

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

Then use model.to(device) and data = data.to(device) to transfer your model and data to the GPU. A tensor’s .to() returns a new tensor, so be careful to keep the result.
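Put together, the pattern looks roughly like this. The tiny linear model and random input are placeholders chosen for illustration, not part of Stable Diffusion, and the function returns None if PyTorch is not installed.

```python
def run_tiny_model():
    """Run a placeholder model on the GPU if available, else on the CPU."""
    try:
        import torch
        from torch import nn
    except ImportError:
        return None

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    model = nn.Linear(4, 2).to(device)   # moves the model's parameters
    data = torch.randn(8, 4).to(device)  # .to() returns a new tensor; keep it

    with torch.no_grad():
        output = model(data)

    # The output lives on the same device as the model and the input
    return output.device.type, tuple(output.shape)


print(run_tiny_model())
```

If the first value printed is "cpu" on a machine with a GPU, PyTorch did not find a usable CUDA device, and the earlier steps are the place to look.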

12. Check For Errors And Warnings:

Check if there are any error messages or warnings in your code that explain why the GPU isn’t working.
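When the cause is unclear, it can help to trigger the failure on purpose and read the message. The sketch below tries to create a tensor on the GPU and returns whatever error PyTorch raises; the helper name is invented, and the exact exception type and wording depend on the cause and the PyTorch version.

```python
def try_cuda_allocation():
    """Try to allocate a tensor on the GPU and report the outcome as text."""
    try:
        import torch
    except ImportError as exc:
        return f"PyTorch import failed: {exc}"

    try:
        torch.zeros(1, device="cuda")
    except Exception as exc:  # the exception type varies with the cause
        return f"{type(exc).__name__}: {exc}"
    return "ok"


print(try_cuda_allocation())
```

The text of the message usually says whether the problem is the PyTorch build, the driver, or the GPU itself.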

If you still have problems after trying these steps, it’s a good idea to:

- Check the documentation and support resources for the deep learning framework you’re using.

- Visit relevant forums or communities for more help with troubleshooting.

Efforts To Make GPUs Better For Stable Diffusion:

1. Algorithm Refinement:

As technology advances, we want faster and better computing. One active area of progress is making graphics processing units (GPUs) work even better for diffusion models.

Originally, GPUs were built for graphics, but now they’re widely used for scientific computing and machine learning too. People are finding smart ways to use GPUs for Stable Diffusion.

They’re developing new algorithms and optimizations that reduce the computation each result needs, and they keep refining them so that GPUs can do this work faster.

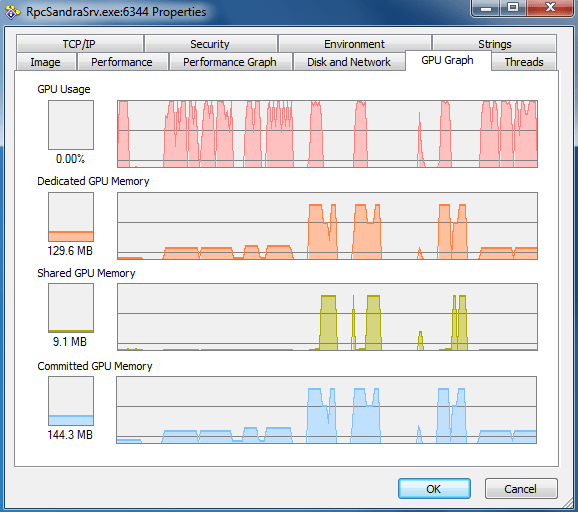

2. Memory Optimization:

GPUs are great at complex visuals, but handling memory for stability is tricky.

Recent efforts aim to make diffusion smoother with:

- Enhancing Cache Coherence: Reduce cache misses by improving data movement within GPU memory levels. This stores more data in faster memory, making diffusion tasks better.

- Exploiting Diffusion Algorithm Parallelism: Design special memory setups just for diffusion. This reduces memory delays, making diffusion more efficient and stable.

3. GPU-Aware Libraries:

Efforts to improve GPUs for stable diffusion led to GPU-aware libraries.

- These libraries are made to boost performance and efficiency for GPU-dependent tasks.

- They aim to make diffusion algorithms faster and more stable.

- GPU-aware libraries handle large data sets well, unlike traditional CPU-based methods.

- They offload intensive tasks to GPUs, allowing larger data without slowdowns.

- These libraries also scale efficiently across multiple GPUs or machines in distributed computing.

- By using GPU parallel processing, they distribute work for faster execution and better system performance.

4. Hybrid Computing:

- Researchers and developers are finding ways to enhance performance and stability.

- Specialized hardware accelerators are used to offload specific tasks from the GPU.

- This lets the GPU focus on processing graphics, making diffusion more efficient and stable.

- Programming models are advancing to integrate workflows across different devices.

- This allows both CPUs and GPUs to work together, opening possibilities for complex simulations and data analysis.

- Combining hardware acceleration and optimized programming creates exciting opportunities for hybrid computing in science, AI, and high-performance computing.

Conclusion:

In conclusion, Stable Diffusion on Torch is undoubtedly a powerful tool for various applications. However, incompatibility between Torch and the GPU and missing GPU support in Python setups can keep it from reaching its full potential.

Moreover, complex algorithms and high memory demands are additional challenges. Lastly, data transfer overheads further limit its effectiveness.

Despite these setbacks, the solutions given above can overcome most of these obstacles. By working through them, you can unlock the full power of Stable Diffusion on your GPU.

Frequently Asked Questions:

1. What Are The Potential Applications Of Stable Diffusion Torch Simulations When GPU Compatibility Is Optimized?

Optimized GPU compatibility with Stable Diffusion Torch simulations can have applications in various fields, including scientific research, artificial intelligence, high-performance computing, and engineering, enabling faster and more accurate modeling of complex phenomena.

2. Can I Still Achieve High-Quality Results Without Using A GPU?

Yes. Stable Diffusion can run on the CPU, and image quality depends on the model and its settings rather than on the device. The trade-off is speed: generating an image on a CPU takes much longer than on a compatible GPU.

3. How Can I Ensure Maximum Efficiency When Using Stable Diffusion Torch Without GPU Support?

To maximize efficiency, reduce the amount of work per image: use a lower resolution, fewer sampling steps, and a batch size of one. Closing other memory-hungry programs and following the guidelines of the interface you use will also help optimize performance.

4. Can Cloud-Based GPU Resources Be An Alternative Solution For Stable Diffusion Torch Simulations With GPU Compatibility Issues?

Yes, using cloud-based GPU resources can be a practical solution to overcome GPU compatibility challenges. Cloud providers offer a range of GPU options, allowing researchers and developers to select GPUs that align with their simulation requirements without hardware constraints.

5. Are There Any Community-Driven Initiatives Or Open-Source Projects Addressing GPU Compatibility Issues With Stable Diffusion Torch?

Yes, the deep learning community often collaborates on open-source projects and initiatives to improve GPU compatibility for various applications, including Stable Diffusion Torch. Exploring online forums, GitHub repositories, and community-contributed resources can provide valuable insights and solutions.