Detect The Available GPUs and Deal With Any System Changes – Tackle System Adjustments!

GPUs now power gaming, machine learning, and scientific computing. For your programs to perform well, they need to detect the GPUs that are present and adapt when the system changes.

Problems detecting GPUs usually stem from software bugs, hardware misconfiguration, driver incompatibility, resource conflicts, operating system updates, insufficient permissions, network or connectivity issues, or insufficient hardware resources.

In this blog post, we’ll delve into why detecting GPUs is crucial and how to handle system changes efficiently.

Possible Methods To Detect Available GPUs:

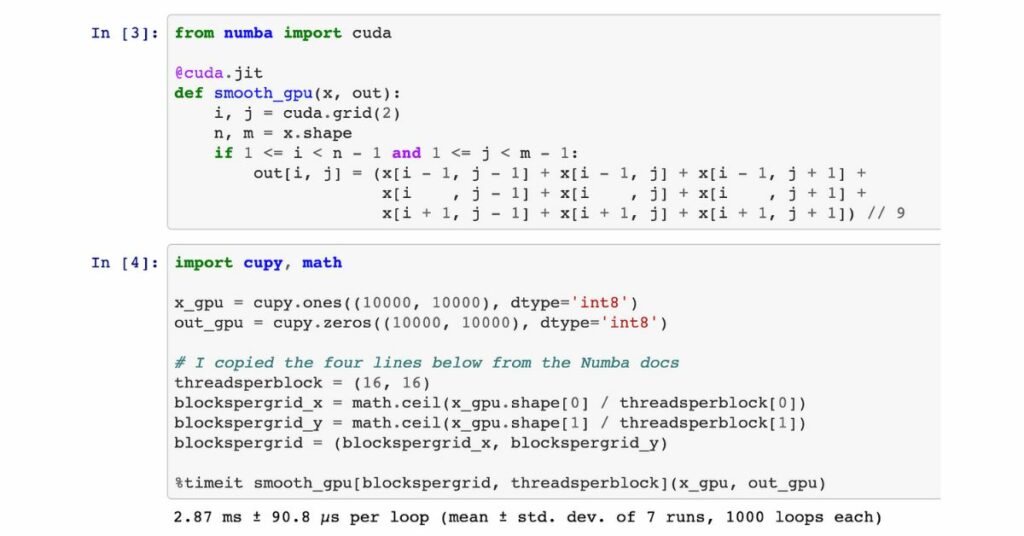

1. Using Python And CUDA:

Using Python and CUDA together gives developers effective ways to find available GPUs. You can do this through the nvidia-smi command-line tool or by calling CUDA APIs directly.

If you’re a developer using NVIDIA GPUs, you can pair the NVIDIA CUDA Toolkit with Python libraries like PyTorch or TensorFlow for GPU detection. Below is a basic code snippet to start with:

import torch

# Check whether PyTorch can see a CUDA-capable GPU and driver.
if torch.cuda.is_available():
    num_gpus = torch.cuda.device_count()
    print(f"Found {num_gpus} CUDA device(s).")
else:
    print("No CUDA devices found.")

2. Using System APIs:

Developers can discover GPUs in a computer using different methods.

- APIs like DirectX in Windows allow access to GPU properties.

- Specialized libraries such as CUDA (NVIDIA GPUs only) and OpenCL (vendor-neutral) can enumerate GPUs on multiple operating systems.

- Python packages like PyCUDA and TensorFlow simplify GPU detection for developers.

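On Linux systems, you can use system-level commands like ‘lspci’ or ‘nvidia-smi’ to list the available GPUs. Some GPUs show up in ‘lspci’ as a 3D controller or display controller rather than a VGA device, so it is safer to match all three. For instance:

lspci | grep -Ei 'vga|3d|display'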

nvidia-smi

3. Using GPU Libraries:

Using GPU libraries is one way to detect available GPUs in a system. Libraries like CUDA and OpenCL provide APIs that allow developers to interact with GPUs and perform various computations.

Using these libraries, programmers can query the system for information about the available GPUs, including their specifications, such as memory size, clock speed, and number of compute units.

This method is beneficial for applications that require dynamic selection of GPUs based on their capabilities.
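As a rough illustration of querying specifications, the sketch below reads each device's name, memory size, and number of compute units through PyTorch's torch.cuda.get_device_properties. It assumes PyTorch is the library in use and returns an empty list when PyTorch or CUDA is missing. The helper names describe_gpus and pick_largest_memory are made up for this example.

```python
def describe_gpus():
    """Return one dict per CUDA device, or an empty list if none can be queried."""
    try:
        import torch  # assumption: PyTorch is the GPU library in use
    except ImportError:
        return []
    if not torch.cuda.is_available():
        return []
    gpus = []
    for index in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(index)
        gpus.append({
            "index": index,
            "name": props.name,
            "memory_mb": props.total_memory // (1024 * 1024),
            "compute_units": props.multi_processor_count,
        })
    return gpus


def pick_largest_memory(gpus):
    """Return the entry with the most memory, or None when the list is empty."""
    return max(gpus, key=lambda gpu: gpu["memory_mb"], default=None)


if __name__ == "__main__":
    for gpu in describe_gpus():
        print(gpu)
```

Selecting by memory is only one possible policy. An application could just as well rank devices by compute units or any other property it cares about.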

4. Using System-Level Tools:

Operating systems like Windows, macOS, and Linux often provide tools that can display information about the hardware installed in a computer. These tools typically include device managers or command-line utilities that list all the devices connected to the system, including GPUs.

By accessing these tools programmatically or parsing their output, developers can identify the available GPUs and gather information about them.
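Parsing tool output could look something like the sketch below, which calls nvidia-smi with its CSV query flags and turns each row into a dictionary. It assumes an NVIDIA driver is installed and quietly returns an empty list when the tool is missing. The function names parse_gpu_csv and query_gpus are illustrative, not part of any library.

```python
import shutil
import subprocess

QUERY_FIELDS = "index,name,memory.total"


def parse_gpu_csv(text):
    """Parse 'index, name, memory' rows as printed by nvidia-smi in CSV mode."""
    gpus = []
    for line in text.strip().splitlines():
        parts = [part.strip() for part in line.split(",")]
        if len(parts) != 3:
            continue  # skip rows that do not match the expected layout
        index, name, memory_mb = parts
        try:
            gpus.append({"index": int(index), "name": name, "memory_mb": int(memory_mb)})
        except ValueError:
            continue  # skip rows whose numeric fields are not reported
    return gpus


def query_gpus():
    """Run nvidia-smi if it is installed; return an empty list otherwise."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)
```

Keeping the parser separate from the subprocess call makes it possible to test the parsing logic on a machine that has no GPU at all.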

Advantages Of Detecting Available GPUs:

1. Performance Optimization:

Detecting available GPUs is vital for tasks requiring significant computational power, especially in fields like machine learning.

- It enables the utilization of parallel processing capabilities, reducing algorithm execution time.

- Multiple GPUs in modern systems can work together or independently, and detection helps allocate tasks efficiently.

- Access to specialized hardware features of different GPU models can improve performance for specific tasks.

- Examples include tensor cores for deep learning acceleration and video decoding capabilities for real-time video processing.

2. Resource Management:

Identifying available GPUs helps system admins and developers use computational resources effectively.

- This improves resource utilization, enhances performance, and reduces user waiting times.

- Detecting GPUs enables efficient workload distribution, preventing idle GPUs.

- It also supports scalability in complex systems with multiple GPUs.

- You can easily adjust computing power for peak times or manage hardware failures by redistributing workloads.

You can optimize resource allocation, preventing system overloads that could result in crashes or subpar performance in your applications.
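One simple way to spread work across detected GPUs is round-robin assignment. The sketch below is a minimal version of that idea. The function name assign_round_robin and the task labels are hypothetical, and a real scheduler would likely also weigh task size and GPU load.

```python
from itertools import cycle


def assign_round_robin(tasks, device_ids):
    """Spread tasks evenly over the given GPU ids, in order."""
    if not device_ids:
        raise ValueError("at least one device id is required")
    plan = {device_id: [] for device_id in device_ids}
    for task, device_id in zip(tasks, cycle(device_ids)):
        plan[device_id].append(task)
    return plan
```

If a GPU fails, calling the function again with only the surviving device ids redistributes the same tasks over the remaining hardware.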

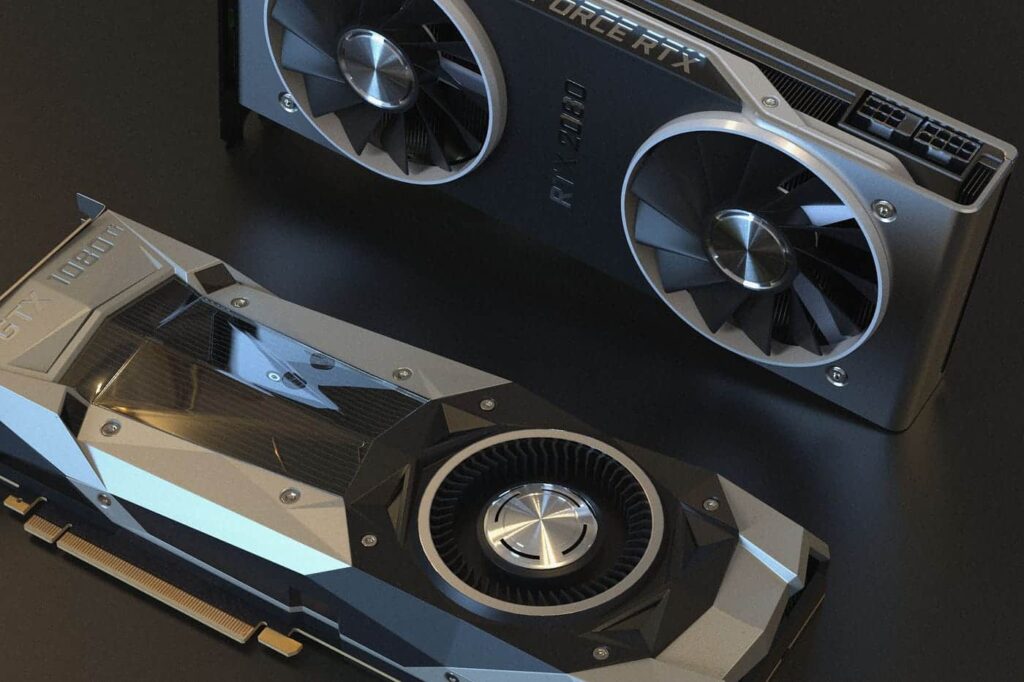

3. Compatibility:

By identifying the available GPUs in a system, developers can ensure their software works well with various hardware configurations. This is especially crucial in gaming, where users may have different graphics cards.

- Optimization: Different graphics cards have varying capabilities and performance levels. Detecting available GPUs allows the software to optimize its performance by leveraging the specific capabilities of each GPU. For example, a game can adjust graphics settings based on the detected GPU to provide the best user experience without sacrificing performance.

- Cutting-Edge Technology: Detecting available GPUs enables developers to tap into the latest technologies offered by specific graphics cards. Some GPUs support advanced features like ray tracing or deep learning capabilities, which can significantly enhance graphical quality or accelerate tasks such as AI processing.
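The idea of adjusting graphics settings to the detected GPU can be sketched as a lookup on video memory. The thresholds and preset names below are invented for illustration and are not taken from any particular game.

```python
# Hypothetical thresholds in MiB of video memory; tune them for a real title.
QUALITY_PRESETS = [
    (8192, "ultra"),
    (4096, "high"),
    (2048, "medium"),
]


def choose_quality(vram_mb):
    """Return the first preset whose memory threshold the GPU meets."""
    for min_vram_mb, preset in QUALITY_PRESETS:
        if vram_mb >= min_vram_mb:
            return preset
    return "low"
```

Video memory is a crude proxy for capability. A production title would probably also check feature support, such as ray tracing, before enabling the matching options.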

4. Power Efficiency:

Detecting available GPUs provides power efficiency and performance optimization benefits. It allows tasks to be assigned to GPUs with lower power consumption, which is especially beneficial in resource-intensive applications like machine learning.

Identifying GPU specifications facilitates efficient workload distribution, enhancing overall performance by matching tasks to GPU capabilities.

Steps To Deal With Any System Changes:

1. Dynamic Resource Allocation:

Make your applications adapt to system changes. Monitor GPU utilization and reassign tasks when too many of them compete for the same GPU.
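A minimal sketch of this kind of allocation is shown below. It assumes you already have a mapping of GPU index to utilization percent, for example gathered with nvidia-smi's utilization.gpu query field. The function name least_loaded_gpu and the 80 percent cutoff are illustrative choices.

```python
def least_loaded_gpu(stats, max_utilization=80):
    """Pick the GPU index with the lowest utilization below the cutoff.

    `stats` maps GPU index -> utilization percent. Returns None when every
    GPU is at or above `max_utilization`, so the caller can queue the task.
    """
    candidates = {index: load for index, load in stats.items() if load < max_utilization}
    if not candidates:
        return None
    return min(candidates, key=candidates.get)
```

Returning None instead of the least-bad GPU leaves the decision to the caller, which can wait, fall back to the CPU, or reject the task.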

2. Robust Error Handling:

Build solid error handling into your code to manage cases where the GPU setup changes or a GPU becomes inaccessible. Good error handling helps prevent crashes and makes the application more reliable and stable.

- Graceful Handling: When software encounters errors related to GPU detection or system changes, it should handle them in a way that prevents crashes or data loss.

- Logging And Reporting: Effective error handling records the relevant error details for debugging and notifies users or developers about the problem.

- Resource Management: Error handling should also release resources such as GPU memory correctly, to avoid leaks or conflicts.
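The three points above can be combined in a small wrapper, sketched here under the assumption that the GPU code path raises RuntimeError on failure (PyTorch typically reports CUDA errors this way). The names run_with_fallback, gpu_fn, cpu_fn, and cleanup are hypothetical.

```python
import logging

logger = logging.getLogger("gpu_fallback")


def run_with_fallback(gpu_fn, cpu_fn, cleanup=None):
    """Try the GPU path first; on a runtime failure, log it and use the CPU path."""
    try:
        return gpu_fn()
    except RuntimeError as exc:
        # Graceful handling plus logging: record the cause, then keep going.
        logger.warning("GPU path failed (%s); falling back to CPU", exc)
        return cpu_fn()
    finally:
        # Resource management: always run the caller's cleanup hook.
        if cleanup is not None:
            cleanup()
```

With PyTorch, the cleanup hook could be something like torch.cuda.empty_cache, though whether that is appropriate depends on the application.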

3. Regular Software And BIOS Updates:

Regular software and BIOS updates are essential to keep your system running smoothly and securely. However, these updates can also cause unexpected issues or disrupt your workflow.

- Back Up Your Data: Always back up your important data before any update so you can recover if something goes wrong.

- Gather Information: Research the updates thoroughly. Look for release notes and user experiences online to understand what changes are being made and if there are known issues.

- Assess the Update: Decide whether it’s the right time to proceed with the update based on the information gathered.

- Create a Restore Point: Before making any changes, create a restore point to return to a previous state if problems occur.

- Track Changes: Record the modifications made for easier troubleshooting if issues arise later.

Following these steps can minimize the risks associated with software and BIOS updates.

4. Monitoring And Logging:

Deploy monitoring and logging tools to track system changes and GPU usage. This information is essential for diagnosing problems, improving performance, and catching errors before they escalate.
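A bare-bones monitoring loop might look like the sketch below. It assumes the caller supplies sample_fn, a function that returns a mapping of GPU index to utilization percent. The name monitor, the polling interval, and the 90 percent alert level are all illustrative.

```python
import logging
import time

logger = logging.getLogger("gpu_monitor")


def monitor(sample_fn, iterations, interval_s=5.0, alert_percent=90):
    """Poll `sample_fn` and log each reading; return readings at or above the alert level.

    `sample_fn` is a caller-supplied function returning {gpu_index: utilization_percent}.
    """
    alerts = []
    for _ in range(iterations):
        for index, utilization in sample_fn().items():
            logger.info("gpu=%s utilization=%s%%", index, utilization)
            if utilization >= alert_percent:
                logger.warning("gpu=%s is at %s%% utilization", index, utilization)
                alerts.append((index, utilization))
        time.sleep(interval_s)
    return alerts
```

Passing the sampling function in as a parameter keeps the loop independent of any one tool, so the same code works whether readings come from nvidia-smi, a vendor library, or test data.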

5. Hardware Changes:

Hardware changes include adding or removing GPUs, upgrading to a different GPU, or modifying the overall system configuration. Re-run GPU detection after any such change so your application does not rely on a stale device list.
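One way to notice a hardware change is to compare two snapshots of the installed GPUs. The sketch below assumes each snapshot is a mapping of GPU UUID to name, which could be collected with nvidia-smi's uuid and name query fields. The function name diff_gpus and the sample UUIDs are made up.

```python
def diff_gpus(before, after):
    """Compare two {uuid: name} snapshots and report what changed."""
    added = {uuid: after[uuid] for uuid in after.keys() - before.keys()}
    removed = {uuid: before[uuid] for uuid in before.keys() - after.keys()}
    return added, removed


if __name__ == "__main__":
    previous = {"GPU-aaa": "RTX 3080", "GPU-bbb": "RTX 3080"}
    current = {"GPU-bbb": "RTX 3080", "GPU-ccc": "RTX 4090"}
    added, removed = diff_gpus(previous, current)
    print("added:", added)
    print("removed:", removed)
```

Keying on the UUID rather than the index matters because GPU indices can shift when a card is added or removed.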

Frequently Asked Questions:

1. Are There Tools Available For Monitoring GPU Utilization And System Changes?

Yes, several tools can monitor GPU utilization. They include NVIDIA’s nvidia-smi, GPU-Z, and system monitoring utilities like HWiNFO.

2. What Are Some Common Challenges When Dealing With GPU Resource Management?

Challenges may include load balancing between multiple GPUs, avoiding resource contention, ensuring data synchronization, and optimizing communication between GPUs.

3. Are There Open-Source Libraries Or Frameworks For GPU Detection And Management?

Yes. OpenCL is an open standard with open-source implementations, and open-source frameworks like OpenCV and TensorFlow provide GPU support and can detect and manage GPUs in various applications. NVIDIA’s CUDA toolkit is free to use but proprietary.

Conclusion:

In conclusion, detecting the available GPUs and adapting to any system changes is crucial for optimizing performance in GPU-intensive applications.

Several methods can achieve this: using Python with CUDA, calling system APIs, querying GPU libraries, and running system-level tools.

By implementing these techniques, developers can ensure that their applications make the most efficient use of available GPUs, leading to improved performance and better user experiences.

So, whether you are a developer or a system administrator, it is essential to explore these methods and select the one that best suits your needs to harness the full potential of GPUs in your computing environment.