NVIDIA GeForce RTX 3080 GPU With PyTorch - Ultimate Performance

NVIDIA’s GeForce RTX 3080 is a high-end consumer GPU built for gaming that also performs well in machine learning. As a CUDA-capable card, it works with PyTorch, a widely used open-source deep learning framework, through PyTorch’s CUDA backend.

The NVIDIA GeForce RTX 3080 is well suited to deep learning in PyTorch. Its CUDA cores handle general parallel computation and its Tensor Cores accelerate matrix math, which makes it a popular pick for researchers and developers using PyTorch in machine learning applications.

Get ready to dive into a world where stunning visuals and cutting-edge AI algorithms collide.

Understanding NVIDIA GeForce RTX 3080 And Its Key Features:

The NVIDIA GeForce RTX 3080 has taken the gaming world by storm, and it’s not hard to see why. With its impressive performance and cutting-edge features, this graphics card has become a must-have for any serious gamer.

What sets the RTX 3080 apart from its predecessors is its use of Ampere architecture, which delivers significant improvements in efficiency and performance. This means faster frame rates, smoother gameplay, and more detailed visuals.

1. Key Features:

- CUDA Cores:

The RTX 3080 has 8,704 CUDA cores (on the original 10 GB model), which run many operations in parallel and make the card well-suited for deep learning tasks.

- Tensor Cores:

Specialized Tensor Cores improve the GPU’s capacity for carrying out matrix multiplications, an essential process in training neural networks.

- Ray Tracing:

The RTX 3080 stands out, particularly for its ray-tracing capabilities. Ray tracing technology enhances games by providing more realistic lighting effects and reflections, resulting in a highly immersive experience.

Thanks to the RTX 3080’s specialized hardware designed for ray tracing, gamers can enjoy visuals that rival the lifelike quality previously seen only in Hollywood movies.

- Memory Bandwidth:

The GDDR6X memory (about 760 GB/s on the original 10 GB model) provides rapid data access, which is essential for keeping the GPU busy while training large neural networks.
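
Memory capacity matters as much as bandwidth when deciding whether a model can be trained on the card. The following is a rough back-of-the-envelope sketch, not a measurement: it assumes the original 10 GB RTX 3080, plain FP32 training with the Adam optimizer, and it ignores activations and framework overhead. The function names and the `headroom` margin are made up for illustration.

```python
# Rough estimate of training memory for model state only (no activations).
# Assumption: plain FP32 training with Adam, which keeps per parameter:
#   4 bytes weight + 4 bytes gradient + 8 bytes optimizer state (two moments).
BYTES_PER_PARAM_FP32_ADAM = 4 + 4 + 8

GIB = 1024 ** 3


def model_state_gib(num_params: int,
                    bytes_per_param: int = BYTES_PER_PARAM_FP32_ADAM) -> float:
    """Return the memory needed for weights, gradients, and Adam state in GiB."""
    return num_params * bytes_per_param / GIB


def fits_in_vram(num_params: int, vram_gib: float = 10.0,
                 headroom: float = 0.5) -> bool:
    """Check whether model state fits, reserving a fraction of VRAM for activations.

    `headroom` is a hypothetical safety margin, not a measured value.
    """
    return model_state_gib(num_params) <= vram_gib * (1.0 - headroom)


if __name__ == "__main__":
    examples = [
        ("ResNet-50 (about 25.6M parameters)", 25_600_000),
        ("1B-parameter model", 1_000_000_000),
    ]
    for name, params in examples:
        print(f"{name}: {model_state_gib(params):.2f} GiB of model state, "
              f"fits: {fits_in_vram(params)}")
```

Real memory use depends heavily on batch size and activation sizes, so treat the result as a lower bound rather than a guarantee.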

PyTorch: A Brief Overview And Its Key Features:

Thanks to its flexibility and ease of use, PyTorch has quickly become one of the most popular deep-learning frameworks.

Its dynamic computational graph allows easy debugging and experimentation, making it a favorite among researchers and practitioners.

But what sets PyTorch apart is not just its technical capabilities – it’s also the strong community that surrounds it.

1. Key Features:

- Dynamic Computational Graph:

PyTorch’s dynamic computational graph simplifies model debugging and experimentation, making it a favored choice among researchers.

- Eager Execution:

Eager execution enables users to evaluate operations immediately, offering a more interactive and flexible approach to model development.

- TorchScript:

PyTorch supports converting models into TorchScript, facilitating deployment in production environments and mobile devices.
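
To make these three features concrete, here is a minimal sketch that runs a toy module eagerly and then converts it with `torch.jit.script`. The `Gate` module and its layer sizes are hypothetical and chosen only to show data-dependent control flow.

```python
import torch
from torch import nn


class Gate(nn.Module):
    """Toy module with data-dependent control flow (hypothetical example)."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(4, 4)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        y = self.linear(x)
        # In eager mode this branch is ordinary Python, evaluated on every call.
        if y.sum() > 0:
            return torch.relu(y)
        return -y


torch.manual_seed(0)
model = Gate().eval()
x = torch.randn(2, 4)

eager_out = model(x)                 # runs immediately, easy to inspect or debug
scripted = torch.jit.script(model)   # compiles forward(), keeping the if/else
scripted_out = scripted(x)

# Both execution paths should produce the same values.
print(torch.allclose(eager_out, scripted_out))
```

The scripted module can be saved with `scripted.save(...)` and loaded without the original Python class, which is what makes it useful for deployment.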

Benefits of using RTX 3080 with PyTorch:

- Parallel Processing Power:

A large number of CUDA cores for efficient parallel processing.

- CUDA Support:

Robust support for CUDA in PyTorch, allowing offloading of computation to the GPU.

- High Memory Bandwidth:

High memory bandwidth for handling large datasets and complex model architectures.

- Tensor Cores for Mixed-Precision Training:

Tensor Cores accelerate mixed-precision training in PyTorch.

- Ray Tracing for Visualizations:

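The RT cores accelerate real-time ray tracing in rendering applications, which can help when producing realistic visualizations or synthetic images for computer vision work. PyTorch itself does not use the RT cores.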

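- Multi-GPU Training over PCIe:

The RTX 3080 has no NVLink connector (in the GeForce 30 series it is reserved for the RTX 3090 models), so multiple cards exchange data over PCIe. PyTorch can still train across several RTX 3080s, although transfers between GPUs are slower than on NVLink-equipped cards.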

- Community Support:

Widespread adoption of NVIDIA GPUs, including RTX 3080, in the deep learning community with extensive resources and documentation.

- CuDNN Optimization:

PyTorch leverages cuDNN optimized for NVIDIA GPUs, ensuring efficient implementation of deep learning operations.
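
In practice, several of the benefits above come down to placing the model and its inputs on the CUDA device. The sketch below assumes a small, made-up CNN with arbitrary layer sizes, and falls back to the CPU when no GPU is present.

```python
import torch
from torch import nn

# Fall back to the CPU so the sketch also runs on machines without a GPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Let cuDNN benchmark convolution algorithms for fixed input shapes.
# This flag only has an effect when running on a CUDA device.
torch.backends.cudnn.benchmark = True

# Small, hypothetical CNN; layer sizes are arbitrary.
model = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(16, 10),
).to(device)

# Inputs must live on the same device as the model's parameters.
images = torch.randn(8, 3, 32, 32, device=device)

with torch.no_grad():
    logits = model(images)

print(logits.shape, logits.device)
```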

Integrating NVIDIA GeForce RTX 3080 with PyTorch:

1. Installing GPU Drivers:

Install the latest NVIDIA GPU drivers before starting with PyTorch to ensure compatibility and the best performance.

2. Setting Up CUDA Toolkit:

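PyTorch reaches the GPU through CUDA. The official pip and conda packages ship with the CUDA runtime libraries they need, so a separate CUDA Toolkit install is mainly required when building PyTorch or custom CUDA extensions from source. If you do install the toolkit, choose a version that supports the RTX 3080 (CUDA 11.1 or later).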

3. Installing PyTorch with GPU Support:

Install a CUDA-enabled PyTorch build to harness the parallel processing capability of the RTX 3080. The command below pins an older release built against CUDA 11.1; for a current release, use the command generated by the install selector on pytorch.org:

pip install torch==1.9.0+cu111 torchvision==0.10.0+cu111 torchaudio==0.9.0+cu111 -f https://download.pytorch.org/whl/torch_stable.html

4. Verifying GPU Availability:

Run the following snippet to confirm that PyTorch detects the RTX 3080:

    import torch

    print(torch.cuda.is_available())  # True if PyTorch can see a CUDA GPU
    if torch.cuda.is_available():
        print(torch.cuda.get_device_name(0))  # name of the first GPU
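
For a fuller picture than a yes/no answer, the check can be extended to report the PyTorch build and the device properties. This is a sketch; `describe_gpu` is a hypothetical helper name. On an RTX 3080 the compute capability should read 8.6.

```python
import torch


def describe_gpu(index: int = 0) -> dict:
    """Collect basic facts about the PyTorch build and one CUDA device."""
    info = {
        "torch_version": torch.__version__,
        # CUDA version the binary was built with; None for CPU-only builds.
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        props = torch.cuda.get_device_properties(index)
        info.update(
            name=props.name,
            total_memory_gib=round(props.total_memory / 1024 ** 3, 2),
            streaming_multiprocessors=props.multi_processor_count,
            compute_capability=f"{props.major}.{props.minor}",
        )
    return info


for key, value in describe_gpu().items():
    print(f"{key}: {value}")
```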

Optimizing PyTorch Models for RTX 3080:

- Utilizing Mixed Precision Training:

Make better use of the RTX 3080’s Tensor Cores by enabling mixed-precision training. Running eligible operations in 16-bit precision can substantially shorten training times, usually with little or no loss in model accuracy.

- Data Parallelism:

With more than one GPU, data parallelism replicates the model on each card and splits every batch across them to expedite training. On a single RTX 3080, the CUDA cores already work in parallel within each operation, so no extra setup is needed.

- Model Parallelism:

Implement model parallelism for larger models that might exceed a single GPU’s memory. The model’s layers are distributed across multiple GPUs so that each card holds only part of the model.
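
As an illustration of the first technique, the loop below is a minimal mixed-precision sketch using `torch.autocast` and a gradient scaler. The tiny model, the synthetic batch, and the hyperparameters are placeholders. The code disables mixed precision when no GPU is available, and recent PyTorch releases also expose the scaler as `torch.amp.GradScaler`.

```python
import torch
from torch import nn

use_cuda = torch.cuda.is_available()
device = torch.device("cuda" if use_cuda else "cpu")
# float16 is the usual choice on Ampere GPUs; bfloat16 is only a CPU placeholder.
amp_dtype = torch.float16 if use_cuda else torch.bfloat16

torch.manual_seed(0)
model = nn.Sequential(nn.Linear(64, 128), nn.ReLU(), nn.Linear(128, 10)).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.CrossEntropyLoss()

# Gradient scaling guards against FP16 underflow; it is a no-op when disabled.
scaler = torch.cuda.amp.GradScaler(enabled=use_cuda)

# Synthetic batch standing in for real training data.
inputs = torch.randn(32, 64, device=device)
targets = torch.randint(0, 10, (32,), device=device)

losses = []
for step in range(5):
    optimizer.zero_grad()
    # Inside autocast, eligible ops (e.g. matmul) run in reduced precision.
    with torch.autocast(device_type=device.type, dtype=amp_dtype, enabled=use_cuda):
        loss = loss_fn(model(inputs), targets)
    scaler.scale(loss).backward()  # scale the loss before backpropagation
    scaler.step(optimizer)         # unscale gradients, then call optimizer.step()
    scaler.update()                # adjust the scale factor for the next step
    losses.append(loss.item())

print(losses)
```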

GeForce RTX 3080, CUDA Capability sm_86, and PyTorch Installation:

The error message stating, “GeForce RTX 3080 with CUDA capability sm_86 is not compatible with the current PyTorch installation,” indicates that the installed PyTorch binaries were not compiled for the RTX 3080’s compute capability (8.6). This typically happens when the build targets a CUDA version that predates the Ampere architecture. To resolve this error, follow these steps:

1. Update PyTorch:

Install a PyTorch build compiled against CUDA 11 or newer, such as the +cu111 wheels in the installation command above. Builds that target older CUDA versions do not include kernels for Ampere GPUs.

2. CUDA Toolkit Update:

If you build PyTorch or custom CUDA extensions from source, confirm that the CUDA Toolkit on your system is version 11.1 or later, since older toolkits cannot compile for sm_86. The official prebuilt PyTorch packages bundle their own CUDA runtime, so for those the CUDA version of the package matters more than the locally installed toolkit.

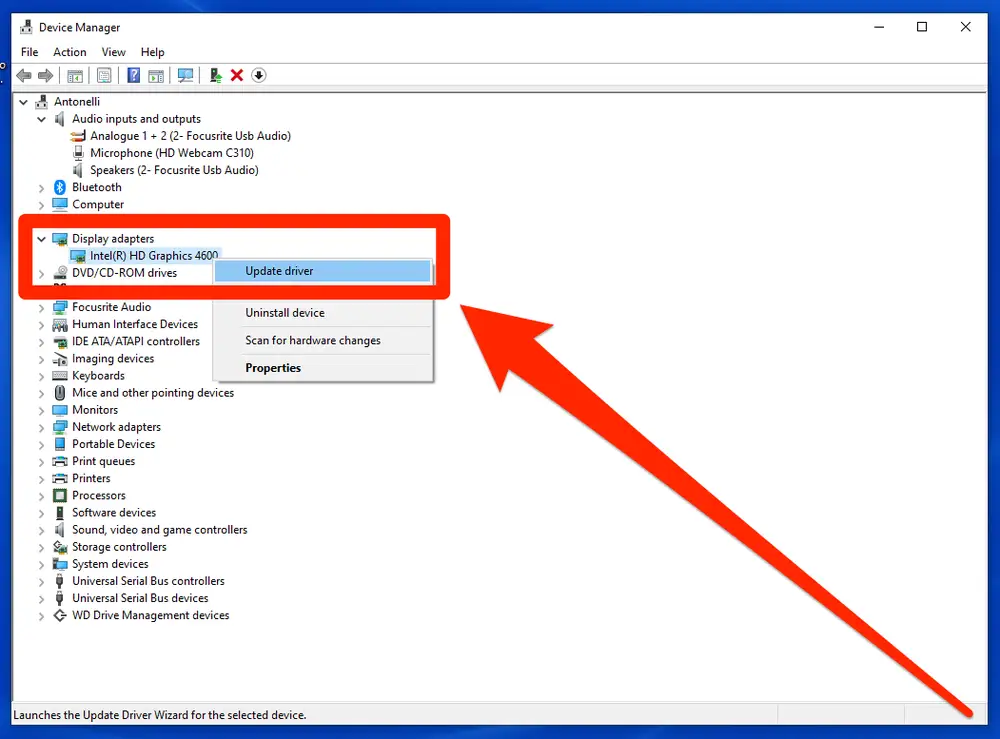

3. Driver Updates:

Ensure that you have the most recent GPU drivers installed. NVIDIA regularly issues driver updates to improve performance and tackle compatibility issues. Updating your drivers can often resolve problems related to GPU compatibility.

4. Community Forums and Documentation:

Investigate PyTorch community forums, GitHub issues, and official documentation to tap into the collective knowledge of other users who may have faced similar problems.

Developers often share valuable insights, solutions, workarounds, or patches for compatibility issues in these forums, which can be immensely helpful in resolving specific challenges.

5. Consider Alternative Frameworks:

If the problem persists after the steps above, it might be worth exploring alternative deep-learning frameworks, such as TensorFlow.

Different frameworks may have varying levels of support for new hardware architectures, and switching to an alternative could be a practical solution to address the current limitations you’re facing with PyTorch.
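
Before reinstalling anything, it helps to see what the current installation was built for. The sketch below compares the GPU’s compute capability with the architectures compiled into the PyTorch binary. `build_supports` is a hypothetical helper, and the check is deliberately strict: a build without an exact sm_86 entry may still run through forward-compatible code, so treat a negative result as a hint rather than proof.

```python
import torch


def build_supports(capability: tuple, arch_list: list) -> bool:
    """Return True if `arch_list` contains a native kernel target for `capability`.

    `capability` is (major, minor), e.g. (8, 6) for the RTX 3080.
    `arch_list` holds strings such as "sm_80" or "sm_86".
    """
    major, minor = capability
    return f"sm_{major}{minor}" in arch_list


print("PyTorch:", torch.__version__, "| built with CUDA:", torch.version.cuda)

if torch.cuda.is_available():
    capability = torch.cuda.get_device_capability(0)
    arch_list = torch.cuda.get_arch_list()
    print("Device capability:", capability)
    print("Architectures in this build:", arch_list)
    print("Native support:", build_supports(capability, arch_list))
else:
    print("No usable CUDA device: CPU-only build, missing driver, or no GPU.")
```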

Conclusion:

In conclusion, the NVIDIA GeForce RTX 3080 GPU with PyTorch is a powerful combination that offers numerous benefits for machine learning and deep learning tasks.

With its Ampere architecture, CUDA cores, and Tensor Cores, the RTX 3080 provides strong performance and efficiency, enabling faster training and quicker experimentation.

With the PyTorch framework, developers can leverage its intuitive interface and extensive library of pre-trained models to streamline their workflows and achieve optimal results.

Integrating RTX 3080 with PyTorch opens new possibilities for researchers, data scientists, and AI enthusiasts alike.

So why wait? Harness the power of RTX 3080 with PyTorch today and unlock the full potential of your AI projects.

Frequently Asked Questions:

1. How Does DLSS Benefit PyTorch Users On The NVIDIA GeForce RTX 3080?

DLSS (Deep Learning Super Sampling) is a rendering feature that uses AI-based algorithms to upscale lower-resolution game frames in real time. It does not speed up training in PyTorch. The connection is the hardware: DLSS runs on the Tensor Cores, the same units PyTorch uses to accelerate mixed-precision training.

2. Can I Use Multiple NVIDIA GeForce RTX 3080 GPUs Together For Parallel Processing In PyTorch?

Yes. PyTorch treats each GPU as a separate CUDA device and can spread training across them with DataParallel or DistributedDataParallel. SLI is not required, and the RTX 3080 has no SLI or NVLink connector, so the cards communicate over PCIe.
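
A minimal sketch of single-process data parallelism follows, assuming a placeholder linear model and random input. It wraps the model in `nn.DataParallel` only when more than one GPU is visible, so it also runs on one GPU or on the CPU. For serious multi-GPU training, the PyTorch documentation recommends `DistributedDataParallel`, which needs more setup than fits here.

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Linear(128, 10)  # placeholder model

# Replicate the model on every visible GPU; each replica gets a slice of the batch.
if torch.cuda.device_count() > 1:
    model = nn.DataParallel(model)
model = model.to(device)

batch = torch.randn(64, 128, device=device)
with torch.no_grad():
    outputs = model(batch)  # results are gathered back on the first device

print(outputs.shape)
```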

3. Is PyTorch The Only Machine Learning Library Compatible With The RTX 3080 GPU?

No, but PyTorch is popular due to its dynamic computational graph and ease of use. TensorFlow and other frameworks can also be configured to work with the RTX 3080.

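4. What Role Does The CUDA Toolkit Play In Optimizing The RTX 3080 For PyTorch?

PyTorch’s GPU acceleration is built on CUDA. The official prebuilt packages bundle the CUDA runtime libraries they need, so the main requirements are a recent NVIDIA driver and a PyTorch build that targets CUDA 11 or newer. A separately installed CUDA Toolkit matters mostly when compiling PyTorch or custom CUDA extensions from source.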

5. How Do I Handle Memory-Intensive Models That May Not Fit Entirely Into The RTX 3080’s Memory?

For larger models, consider implementing model parallelism, distributing different parts of the model across multiple RTX 3080 GPUs to overcome memory limitations.
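
The idea can be sketched as follows, assuming a hypothetical two-stage model with arbitrary layer sizes. Each stage is placed on its own GPU when two are available; otherwise both stages share one device so the example still runs.

```python
import torch
from torch import nn


class TwoStageNet(nn.Module):
    """Hypothetical model whose two halves can live on different devices."""

    def __init__(self, dev0: torch.device, dev1: torch.device) -> None:
        super().__init__()
        self.dev0, self.dev1 = dev0, dev1
        self.stage0 = nn.Sequential(nn.Linear(256, 512), nn.ReLU()).to(dev0)
        self.stage1 = nn.Linear(512, 10).to(dev1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.stage0(x.to(self.dev0))
        # Activations are copied between devices at the stage boundary.
        return self.stage1(x.to(self.dev1))


# Use two GPUs when present; otherwise place both stages on one device.
if torch.cuda.device_count() >= 2:
    dev0, dev1 = torch.device("cuda:0"), torch.device("cuda:1")
elif torch.cuda.is_available():
    dev0 = dev1 = torch.device("cuda:0")
else:
    dev0 = dev1 = torch.device("cpu")

model = TwoStageNet(dev0, dev1)
out = model(torch.randn(4, 256))
print(out.shape, out.device)
```

Because the RTX 3080 has no NVLink, the activation copy between stages travels over PCIe, so it pays to split the model where the tensors crossing the boundary are small.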